Why do so many companies fail to deploy AI, and is it because employees are using it wrong? A look into the data, strategy, and human barriers stunting the AI revolution.

Despite the immense hype and the promise of a new industrial revolution, a significant majority of companies are struggling to move AI from “pilot” stages to meaningful, scalable production. Industry estimates suggest that 70% to 85% of AI projects fail to deliver on their promised business value, with many stuck in what’s known as “Pilot Purgatory.”

Why is this happening? The problem is rarely the technology itself, but rather a combination of organizational and human factors.

Part 1: The Four Pillars of AI Failure

When we look at why enterprise AI initiatives stall, the reasons typically fall into four main categories.

1. The Data Dilemma (“Garbage In, Garbage Out”)

Data is the fuel for AI, but for most legacy companies, this fuel is contaminated. Real-world enterprise data is often messy, incomplete, or siloed in fragmented systems that don’t talk to each other. Without a unified and clean view of data, AI models produce unreliable results, breaking trust immediately.

2. Strategic Misalignment

Many companies buy the technology before understanding the problem they’re trying to solve. This “solution in search of a problem” approach leads to flashy demos that look cool but don’t generate real value. Executives often push for “AI” without a clear business case or a realistic path to ROI, leading to projects being axed when they get expensive.

3. Technical & Legacy Debt

Modern AI is built for the cloud and APIs, while many large enterprises still run on rigid software from decades past. Integrating a cutting-edge Large Language Model (LLM) with a 20-year-old mainframe is technically difficult, risky, and slow. A model that works perfectly in a controlled proof-of-concept can easily crash when facing real-world scale.

4. The Human & Cultural Factor

Perhaps the most critical barrier is the human element. There is a massive global shortage of engineers who know how to deploy and maintain AI systems safely. More importantly, employees often resist adopting AI tools out of fear of replacement or because a single mistake by the AI (a “hallucination”) erodes all trust in the system.

Part 2: The “Fire and Forget” Fallacy

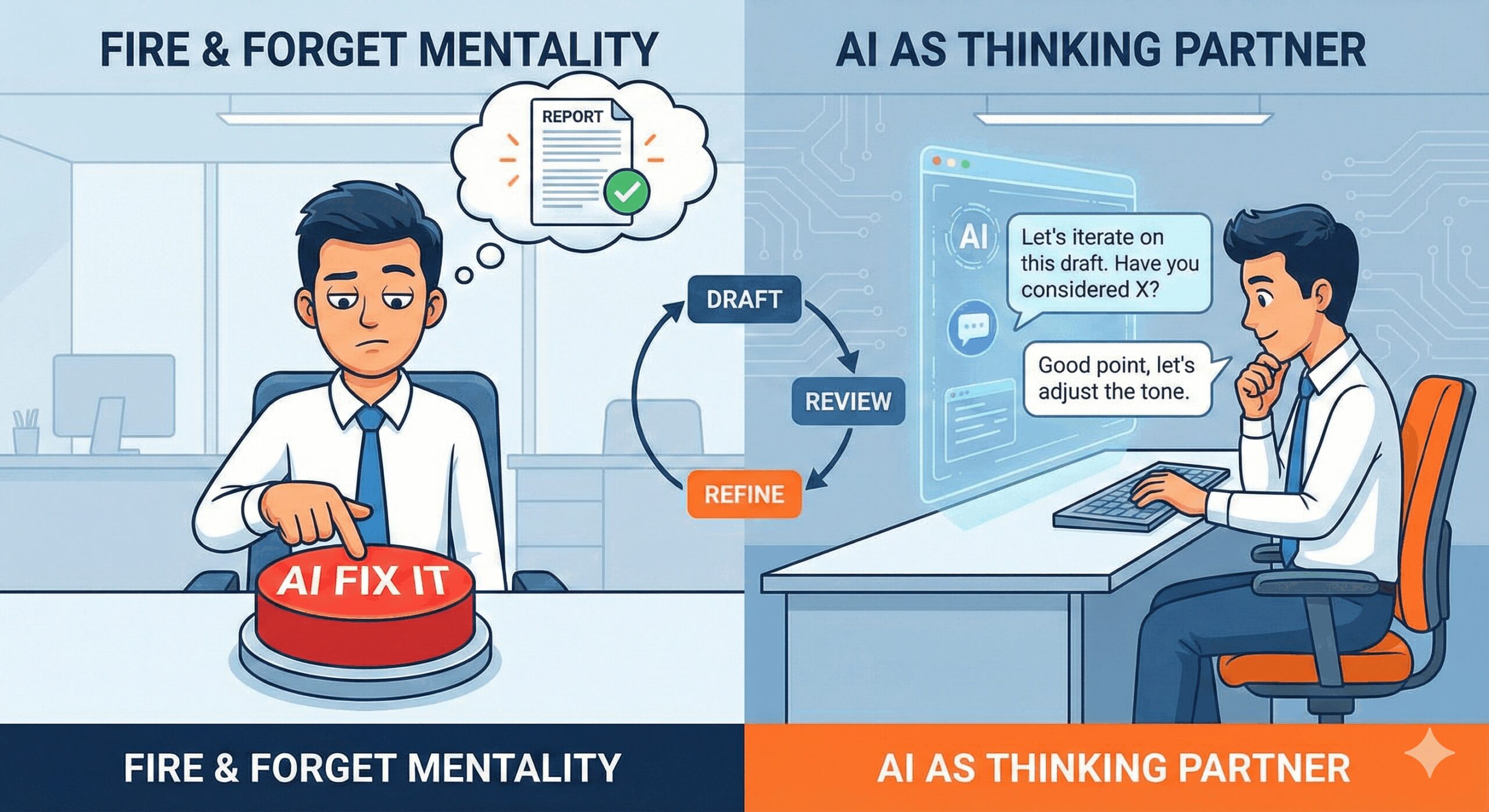

As illustrated above, a primary reason for failure at the individual level is a massive gap in AI literacy. This stems from a fundamental mismatch between employee expectations and the reality of how Generative AI works.

The “Google Mentality” Trap

For two decades, we’ve been trained to use software like a search engine: input a keyword query and expect a definitive, factual answer. Employees bring this same “fire and forget” mindset to AI tools. They paste a zero-context prompt (“Write a sales report”) and expect a perfect, final product.

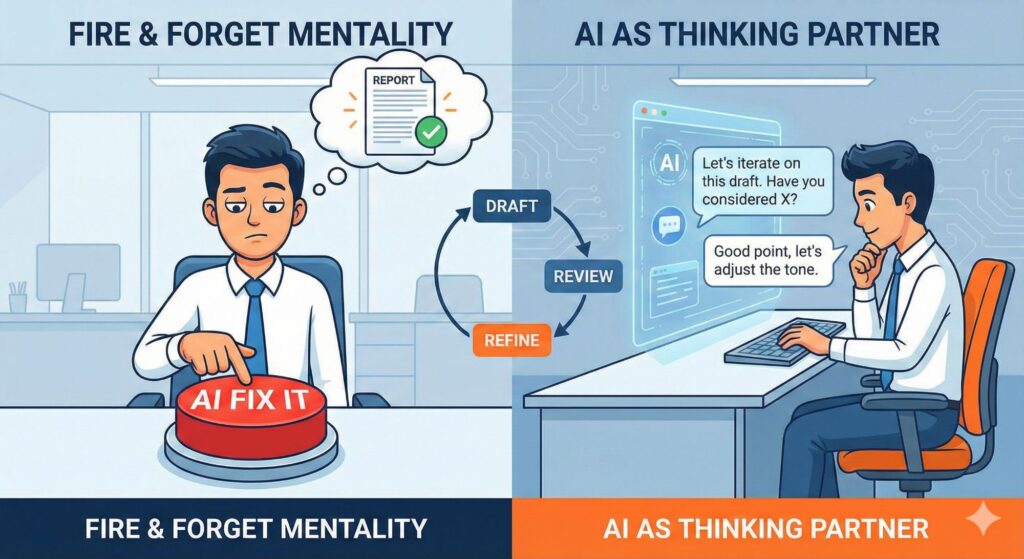

Clerk vs. Co-pilot

The reality is that Generative AI is probabilistic, not deterministic. It doesn’t “know” facts; it predicts the next likely word. To get value from it, employees need to shift from viewing AI as a clerk (someone you hand an order to and then walk away from) to viewing it as a thinking partner or co-pilot.
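To make "probabilistic, not deterministic" concrete, here is a toy sketch of next-word prediction. It is not how any particular product is implemented: the word list and probabilities in `NEXT_WORD_PROBS` are invented for illustration, and the function names are hypothetical. It only shows that drawing from a probability distribution can return different words for the same prompt, whereas a lookup always returns the same one.

```python
import random

# Hypothetical next-word probabilities after the prompt "The quarterly sales were".
# These numbers are invented for illustration, not taken from any real model.
NEXT_WORD_PROBS = {
    "strong": 0.45,
    "flat": 0.30,
    "disappointing": 0.20,
    "purple": 0.05,  # unlikely, but still possible
}


def sample_next_word(probs, rng):
    """Probabilistic choice: draw one word at random, weighted by probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


def most_likely_word(probs):
    """Deterministic choice: always return the highest-probability word."""
    return max(probs, key=probs.get)


if __name__ == "__main__":
    rng = random.Random()
    # The same "prompt" can yield a different word on each draw.
    print([sample_next_word(NEXT_WORD_PROBS, rng) for _ in range(5)])
    # The deterministic version never varies.
    print(most_likely_word(NEXT_WORD_PROBS))
```

Because the sampled output varies from run to run and occasionally lands on an unlikely word, reviewing and steering the result is part of using the tool, which is the shift from clerk to co-pilot.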

This shift requires a new set of skills, including iterative prompting, critical thinking, and fact-checking—a process that initially feels like more work, not less. When the AI produces a generic or flawed response, the employee, stuck in the “fire and forget” mindset, blames the tool (“This is useless”) rather than their own lack of guidance.
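One way to picture the difference between a zero-context prompt and a guided one is a small helper that assembles the prompt from explicit fields. The sketch below is illustrative only: `PromptBrief`, `build_prompt`, and the field names are hypothetical, the example values are placeholders, and the code does not call any AI service.

```python
from dataclasses import dataclass, field


@dataclass
class PromptBrief:
    """Hypothetical container for the guidance an employee gives the AI."""
    task: str
    audience: str = ""
    source_facts: list[str] = field(default_factory=list)
    output_format: str = ""


def build_prompt(brief):
    """Assemble a plain-text prompt, including only the fields that were filled in."""
    parts = [f"Task: {brief.task}"]
    if brief.audience:
        parts.append(f"Audience: {brief.audience}")
    if brief.source_facts:
        parts.append("Use only these facts:")
        parts.extend(f"- {fact}" for fact in brief.source_facts)
    if brief.output_format:
        parts.append(f"Format: {brief.output_format}")
    return "\n".join(parts)


if __name__ == "__main__":
    # "Fire and forget": the model has to guess everything else.
    print(build_prompt(PromptBrief(task="Write a sales report")))
    print()
    # Guided: the same task with placeholder context supplied by the employee.
    print(build_prompt(PromptBrief(
        task="Write a sales report",
        audience="regional managers",
        source_facts=["<figure from the finance system>", "<top product line>"],
        output_format="one page, three sections",
    )))
```

Iterating then means revising the brief after reading the draft, for example by adding a missing fact or tightening the format, rather than discarding the tool after one attempt.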

Conclusion

The technology has arrived, but the mental model for how to use it hasn’t caught up. Companies are deploying powerful “co-pilots” to a workforce that is still trying to use them as “autopilots.” Until organizations address the data, strategic, and cultural barriers—and, crucially, train their people to move beyond the “magic button” mentality—the promise of enterprise AI will remain largely unfulfilled.